How Large Concept Models (LCMs) Are Redefining AI

Dive into the world of Large Concept Models (LCMs), a new direction in AI, and see how they compare with Large Language Models (LLMs). Explore how LCMs process information in a more human-like way, aiming for better coherence, efficiency, and zero-shot generalization. Discover their potential applications, limitations, and ethical considerations, and get a glimpse of the future of AI with LCMs.

TECH DRIVEN FUTURE

Snehanshu Jena

1/14/2025 · 4 min read

We've all seen how Large Language Models (LLMs) have revolutionized AI. They write stories, translate languages, and answer our questions in ways we never thought possible. But even with all their impressive abilities, LLMs have limitations. That's where Large Concept Models (LCMs) come in. This new generation of AI offers a fresh perspective, promising to overcome those limitations and reshape our understanding of intelligent machines.

I was going through Meta's research paper on LCMs, and it's really interesting work with huge potential. In this blog post, we'll take a deep dive into the world of LCMs. We'll explore what they are, how they differ from LLMs, their exciting potential applications, and the challenges and ethical considerations that come with this new technology.

What Makes LCMs Different?

Imagine trying to understand a story by looking at individual letters instead of words or sentences. That's roughly how LLMs work: they focus on individual words or sub-word units called "tokens." LCMs, on the other hand, see the bigger picture. They operate on the level of "concepts," which are abstract representations of ideas or actions. In Meta's paper, a concept corresponds roughly to a whole sentence, represented as a single embedding.

Think of it this way: the sentence "The dog chased the ball" would be represented as a single concept in an LCM, capturing the entire action and relationship between the dog and the ball. An LLM, however, would process this sentence as a sequence of individual tokens ("The", "dog", "chased", "the", "ball").
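To make the contrast concrete, here is a minimal sketch that splits the same text two ways. The helper names `split_tokens` and `split_concepts` are made up for illustration, the regex tokenizer is only a crude stand-in for a real sub-word tokenizer, and treating each sentence as one concept is a simplifying assumption.

```python
import re

def split_tokens(text):
    """Crude stand-in for an LLM tokenizer: words and punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text)

def split_concepts(text):
    """Treat each sentence as one concept (naive split after ., ! or ?)."""
    parts = re.split(r"(?<=[.!?])\s+", text)
    return [p.strip() for p in parts if p.strip()]

text = (
    "The dog chased the ball. "
    "The ball rolled into the garden. "
    "The dog found it."
)

tokens = split_tokens(text)
concepts = split_concepts(text)

print(len(tokens), len(concepts))  # prints: 18 3
```

The same three-sentence story becomes 18 units for a token-level model but only 3 units for a concept-level one.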

This key difference in how LCMs and LLMs process information gives LCMs some major advantages:

Enhanced Coherence: LCMs generate more coherent and consistent text because they focus on the bigger picture. They can better maintain context and relationships between ideas, resulting in more natural and meaningful outputs, especially in long-form content.

Improved Efficiency: LCMs can process information more efficiently, especially in long documents, as they work with shorter sequences of sentence embeddings compared to lengthy token sequences.

Zero-Shot Generalization: LCMs can perform tasks in languages or modalities they weren't explicitly trained on, thanks to their concept-based approach. This means an LCM trained on English text could potentially generate summaries in Spanish or even understand and respond to spoken language.

Hierarchical Reasoning: Unlike the linear, sequential reasoning of LLMs, LCMs exhibit hierarchical reasoning capabilities, similar to humans. This means they can "think in layers," starting with the overall concept and then refining the details, much like how we might outline an essay before writing it.
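The efficiency point can be illustrated with some back-of-the-envelope arithmetic. In standard self-attention, every position attends to every other position, so the number of pairwise interactions grows with the square of the sequence length. The document sizes below are hypothetical numbers chosen for illustration, and the sketch ignores the cost of encoding and decoding each sentence, so treat it as a rough intuition rather than a benchmark.

```python
def attention_pairs(seq_len):
    """Pairwise interactions in full self-attention over seq_len positions."""
    return seq_len * seq_len

# Hypothetical document: 2,000 tokens, or 100 sentences of about 20 tokens each.
n_tokens = 2000
n_sentences = 100

ratio = attention_pairs(n_tokens) / attention_pairs(n_sentences)
print(ratio)  # prints: 400.0
```

Under these assumptions, a sequence that is 20 times shorter needs 400 times fewer pairwise interactions.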

Visualizing LCMs: A Deeper Look Inside

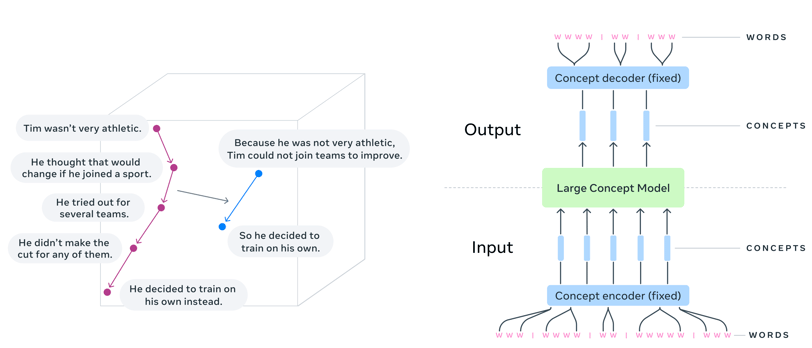

To really understand how LCMs work, it helps to visualize their process. Here's a figure from Meta's research that illustrates concept-based reasoning in action:

(Figure Reference: from Meta’s research paper)

Here's what's going on in this figure:

Concept-based Reasoning (Left side): Instead of analyzing individual words like LLMs, LCMs identify and connect "concepts" or ideas within the text. This allows them to understand the overall meaning and relationships between different parts of the text, leading to more coherent and meaningful outputs.

LCM Architecture (Right side): This shows the basic structure of an LCM. It has a concept encoder that converts the input text into concept embeddings, and a concept decoder that turns concept embeddings back into text. The "Large Concept Model" in the middle is the heart of the system: it takes the sequence of input concepts and predicts the concepts that should come next.
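The encode, predict, decode flow in the figure can be sketched as a toy pipeline. Everything here is a hypothetical stand-in: `encode` is a hashed bag-of-words rather than a trained sentence encoder, `predict_next` simply averages the context instead of running a trained model, and `decode` picks the nearest sentence from a fixed candidate list instead of generating text. The point is only to show how the three pieces connect.

```python
import zlib
import numpy as np

DIM = 64  # hypothetical embedding size

def encode(sentence):
    """Toy concept encoder: hashed bag-of-words, L2-normalized.
    Assumes the sentence contains at least one word."""
    vec = np.zeros(DIM)
    for word in sentence.lower().split():
        vec[zlib.crc32(word.encode("utf-8")) % DIM] += 1.0
    return vec / np.linalg.norm(vec)

def predict_next(context):
    """Placeholder for the concept model: average the context embeddings.
    A real LCM would use a trained network here."""
    mean = np.mean(context, axis=0)
    return mean / np.linalg.norm(mean)

def decode(embedding, candidates):
    """Toy concept decoder: nearest candidate sentence by cosine similarity."""
    scores = [float(encode(c) @ embedding) for c in candidates]
    return candidates[int(np.argmax(scores))]

story = [
    "The dog chased the ball.",
    "The ball rolled into the garden.",
]
candidates = story + [
    "The dog found the ball in the garden.",
    "Stock prices fell sharply today.",
]

context = [encode(s) for s in story]      # text -> concepts
next_embedding = predict_next(context)    # concepts -> next concept
print(decode(next_embedding, candidates)) # next concept -> text
```

Note that the model in the middle never sees words, only embeddings. That separation is what lets the encoder and decoder be swapped for other languages or modalities in principle.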

How are LCMs Trained?

Training LCMs is all about teaching them to predict the next concept in a sequence, given the preceding concepts. This is done using a few different techniques, including:

Diffusion models: These models learn to predict the next concept embedding by gradually refining a random starting point, much like an artist might gradually refine a sketch into a finished painting.

Quantization: This involves converting the continuous concept embeddings into discrete units, making it easier for the LCM to process and generate concepts.
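Both techniques can be sketched in a few lines of NumPy. For diffusion, the snippet shows the standard DDPM-style noising formula and its inverse; it uses the true noise as an "oracle" prediction, whereas a real model would have to learn to estimate that noise. For quantization, it shows a plain nearest-neighbor lookup in a single codebook. The function names, the `alpha_bar` value, and the tiny codebook are all illustrative assumptions, not the setup from Meta's paper.

```python
import numpy as np

rng = np.random.default_rng(0)

def add_noise(x0, alpha_bar, noise):
    """DDPM-style forward step: blend a clean embedding with Gaussian noise."""
    return np.sqrt(alpha_bar) * x0 + np.sqrt(1.0 - alpha_bar) * noise

def remove_noise(x_t, alpha_bar, predicted_noise):
    """Invert the forward step, given an estimate of the noise."""
    return (x_t - np.sqrt(1.0 - alpha_bar) * predicted_noise) / np.sqrt(alpha_bar)

def quantize(embedding, codebook):
    """Return the index and vector of the nearest codebook entry."""
    distances = np.linalg.norm(codebook - embedding, axis=1)
    index = int(np.argmin(distances))
    return index, codebook[index]

# Diffusion: noise a toy concept embedding, then recover it with oracle noise.
concept = rng.normal(size=8)
noise = rng.normal(size=8)
noisy = add_noise(concept, alpha_bar=0.5, noise=noise)
recovered = remove_noise(noisy, alpha_bar=0.5, predicted_noise=noise)
print(np.allclose(recovered, concept))  # prints: True

# Quantization: snap a continuous embedding to a discrete codebook entry.
codebook = np.array([[0.0, 0.0], [1.0, 1.0], [-1.0, 2.0]])
index, code = quantize(np.array([0.9, 1.2]), codebook)
print(index)  # prints: 1
```

In training, the interesting part is the one the sketch skips: learning a network that predicts the noise (or the clean embedding) from the noisy one and the preceding concepts.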

What Can LCMs Do?

The unique capabilities of LCMs open up a world of possibilities:

Multilingual Summarization: LCMs have shown impressive performance in summarizing text across multiple languages, even those they weren't specifically trained on. Imagine the impact this could have on global communication and information sharing!

Summary Expansion: LCMs can take a short summary and expand it into a detailed and coherent text, making them invaluable for generating reports, articles, or even creative content.

Complex Problem Solving: With their hierarchical reasoning abilities, LCMs are well-suited for tackling complex problems that require multi-step planning and decision-making.

Cross-Modality Applications: LCMs could be used for tasks like video captioning, translating between spoken and written language, or even generating images from text descriptions.

Challenges and Limitations

While LCMs hold immense promise, they're still a relatively new technology with challenges to overcome:

Embedding Space Selection: Finding the right way to represent concepts is crucial for LCM performance.

Joint Encoder & Decoder Training: Currently, LCMs are trained with a fixed encoder. Jointly training the encoder and decoder could lead to even better results.

Concept Granularity: Determining the optimal level of concept abstraction is an ongoing challenge.

Computational Cost: Training and deploying large-scale LCMs can be computationally expensive.

Handling Long Sentences and Maintaining Consistent Quality: Current LCMs face challenges in effectively processing very long sentences and ensuring consistent quality across all supported languages.

Ethical Considerations

As with any powerful AI technology, it's crucial to consider the ethical implications of LCMs:

Bias and Fairness: We need to ensure LCMs are free from bias and treat all individuals and groups fairly.

Misinformation and Manipulation: LCMs could be used to generate convincing but false information, so safeguards are needed to prevent their misuse.

Transparency and Explainability: Understanding how LCMs arrive at their conclusions is important for building trust and accountability.

Privacy and Data Security: Protecting user data and ensuring responsible data handling practices are essential.

The Future of LCMs

LCMs represent a significant leap forward in AI, moving us closer to machines that can truly understand and reason about the world. As research progresses and these models mature, they have the potential to revolutionize various fields, from natural language processing and content creation to human-computer interaction and scientific discovery.

Imagine a future where AI can seamlessly translate between languages, generate creative content, assist in complex decision-making, and even contribute to scientific breakthroughs. LCMs could be the key to unlocking these possibilities and more.

The journey of LCMs is just beginning, and the future holds immense potential for this groundbreaking technology. As we continue to explore and develop LCMs, we must do so responsibly, ensuring they are used for the benefit of humanity and contribute to a more equitable and informed world.